Learn without labels: a summary of recent advances in contrastive learning

By Tony Z. Zhao

In this post, we are going to decode some very recent advances in contrastive learning, including SimCLR and supervised contrastive learning. We will take a deep dive into contrastive learning algorithms and try to understand the intuition behind them. We assume basic knowledge of deep learning.

Introduction

Sensors can give us incredibly detailed measurements: even our cell phone cameras are capable of taking photos that have more than 10 million pixels. While human beings are clearly able to interpret such high-resolution images, it is extremely hard for computers to do so.

For example, in the image above, humans can easily describe the image: a cat under a blanket on a sofa. However, for computers it is just an uninterpretable array of pixels corresponding to RGB values. Ideally, we would like computers to be able to interpret images in a similar fashion to humans: by generating a short “summary” of the image that contains high-level features.

In modern machine learning, this is normally referred to as “representation learning”. We want to learn a network that maps a big image to a small vector, thus throwing away details in the picture and instead focusing on more abstract features. In the cat image above, we want our small vector to contain the information that there is a cat in the image, instead of what every individual strand of fur looks like. Because our learned representation is much more concise while retaining the important information, other tasks (such as classification or object detection) that originally took in raw images can be learned much faster by using our low-dimensional input representation instead.

One way to find such an encoder is to take an image classifier that is trained on a large labeled dataset (e.g. ImageNet), and remove the last linear+softmax layer that produces class labels. This is commonly referred to as ‘pre-training’. Such a pre-trained encoder has been observed to vastly outperform a randomly initialized one when we train a new linear layer that takes in the encodings and outputs class labels. This happens even when the new dataset contains very different classes and images compared to the pre-training dataset. Intuitively, we know that such an encoder produces semantically meaningful features because the original classifier obtains the labels from them with just one more layer. This also follows the intuition that conv nets recognize gradually more abstract features as they go deeper. The figure below is a visualization for a VGG16 network trained on ImageNet. It shows the images that “excite” each layer the most. We see that the images progress from random color blocks, to more complex features like dots and circles, to patterns that look like eyes in layer 5.

However, this supervised pre-training approach is limited because hiring humans to label data is expensive. It is also deeply unsatisfying because humans do not need labels when learning to perceive the world. It would be great if our algorithm were able to leverage the vast amount of unlabeled images on the internet and somehow obtain a meaningful representation on its own. This is exactly the problem that contrastive learning is trying to solve, and the setting is commonly referred to as unsupervised representation learning.

Our evaluation metric for the learned representation is simple: we freeze the encoder and append a randomly initialized linear+softmax layer to the end of the encoder. We then train only this new layer with labeled data and measure the test accuracy. If the accuracy is high, we know that our unsupervised representation learning algorithm has managed to capture the underlying structure of unlabeled images: it “clusters” images in a way such that even a linear decision boundary can separate different classes. The figure below illustrates a “good” vs “bad” representation.
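To make the evaluation protocol concrete, here is a minimal sketch in NumPy. Everything in it is an illustrative assumption rather than the setup of any paper: `frozen_encoder` is a hypothetical stand-in for a pre-trained encoder (a small fixed map instead of a conv net), and the "images" are two toy Gaussian blobs. The point is only that the encoder's weights are never updated while the linear+softmax layer is trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained encoder: a fixed, non-trainable map.
W_ENC = np.array([[1.0, -0.5, 0.3],
                  [0.5, 1.0, -0.2]])

def frozen_encoder(x):
    """Map raw inputs to representations; its weights are never updated."""
    return np.tanh(x @ W_ENC)

def make_data(n_per_class):
    """Toy labeled data: two Gaussian blobs standing in for two image classes."""
    x0 = rng.normal(loc=-2.0, scale=1.0, size=(n_per_class, 2))
    x1 = rng.normal(loc=2.0, scale=1.0, size=(n_per_class, 2))
    x = np.concatenate([x0, x1])
    y = np.concatenate([np.zeros(n_per_class, int), np.ones(n_per_class, int)])
    return x, y

def softmax(logits):
    """Row-wise, numerically stable softmax."""
    logits = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)

def train_linear_probe(z, y, num_classes, lr=0.5, steps=200):
    """Fit only a linear+softmax layer on top of fixed representations z."""
    w = np.zeros((z.shape[1], num_classes))
    b = np.zeros(num_classes)
    one_hot = np.eye(num_classes)[y]
    for _ in range(steps):
        probs = softmax(z @ w + b)
        grad_logits = (probs - one_hot) / len(y)  # gradient of mean cross-entropy
        w -= lr * z.T @ grad_logits
        b -= lr * grad_logits.sum(axis=0)
    return w, b

x_train, y_train = make_data(200)
x_test, y_test = make_data(200)

# The encoder is only called, never trained; only w and b are learned.
w, b = train_linear_probe(frozen_encoder(x_train), y_train, num_classes=2)
pred = (frozen_encoder(x_test) @ w + b).argmax(axis=1)
test_accuracy = (pred == y_test).mean()
print(f"linear-probe test accuracy: {test_accuracy:.3f}")
```

Because the classifier is linear, a high test accuracy here can only come from the representation already separating the classes, which is exactly what the metric is meant to measure.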

Contrastive learning has proven to be one of the most promising approaches in unsupervised representation learning. With the evaluation metric described in the last paragraph, contrastive learning methods are able to match, and in some cases outperform, “pre-training” methods which require labeled data. The idea behind contrastive learning is surprisingly simple: the model learns to encode images in a lower dimensional space in such a way that images that are semantically similar are close to each other in that space, and at the same time far away from other images. For example, we would want the representations of cats to be close to those of other cats and far away from the representations of pigs. In the rest of this blog post, we will elaborate on this intuition and describe how it is implemented step by step.

Zooming out, there are multiple ways we can do unsupervised representation learning. Some widely used ones include Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). Unlike contrastive learning, which only trains an encoder, both VAEs and GANs include a network (the decoder in a VAE, the generator in a GAN) that transforms a low-dimensional representation back into an image. From a high level, they make sure the representation captures the relevant information by optimizing the quality of these generated images: through a reconstruction loss in VAEs and an adversarial loss in GANs. In contrastive learning, however, we directly regulate the distances between images in the learned representation space: the representation of a cat image should be close to that of other cat images, while also being far away from the representations of pig images.

How does contrastive learning work?

In contrastive learning, we are trying to find the parameters of our encoder that minimize the contrastive loss on our unlabeled dataset. Contrastive loss implements the “contrastive” idea verbatim: in the learned representation, we want images that are similar to be close to each other, and images that are different to be far away from each other.

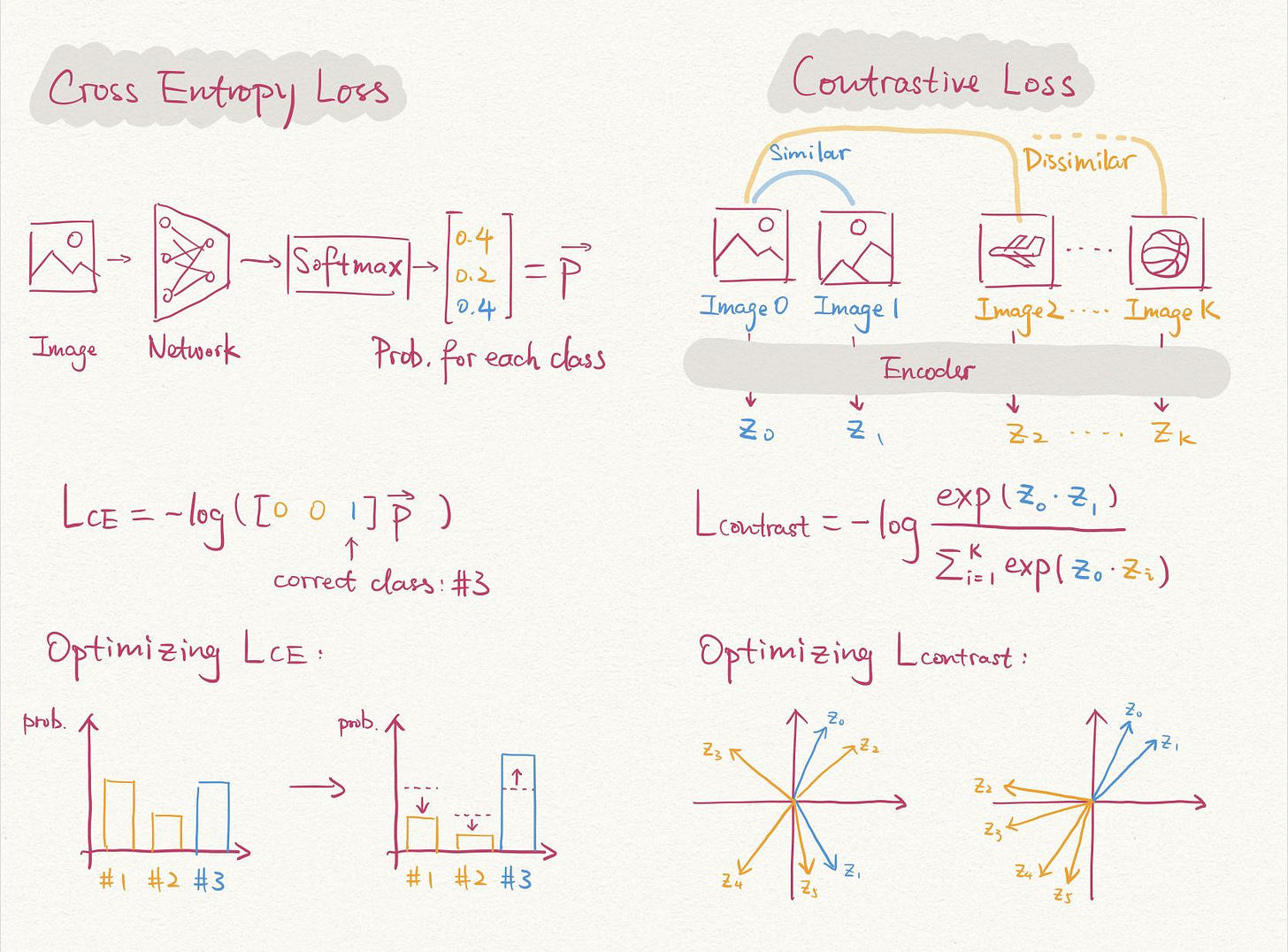

Concretely, the contrastive loss used by recent methods looks extremely similar to a normal cross-entropy loss in classification. When we optimize a normal cross-entropy loss, we are pushing up the probability of the correct class and pushing down the probabilities of the incorrect classes at the same time, since the softmax probabilities over all classes always sum to 1. This turns out to be exactly what we want in contrastive learning: we want to push up the similarity of representations if two images are similar, and push down the similarity when two images are not similar. (It might be unclear how we can judge if two images are similar when we do not have access to labels, but bear with me: we are going to explain this in the next section!)
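The "push up one, push down the rest" behavior of cross-entropy can be checked with a small NumPy sketch. The logits and the step size below are arbitrary illustrative values. The one fact it relies on is that the gradient of the cross-entropy loss with respect to the logits is the softmax output minus the one-hot target.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def cross_entropy(logits, target):
    """Negative log-probability that softmax assigns to the target class."""
    return -np.log(softmax(logits)[target])

logits = np.array([1.0, 0.5, -0.5])  # illustrative scores for 3 classes
target = 0                           # index of the correct class

# Gradient of the loss w.r.t. the logits: softmax(logits) - one_hot(target).
grad = softmax(logits)
grad[target] -= 1.0

# One gradient-descent step: the target logit rises, all the others fall.
new_logits = logits - 1.0 * grad

print("probabilities before:", softmax(logits))
print("probabilities after: ", softmax(new_logits))
```

The gradient is negative only at the target index, so a single descent step raises the correct class's score and lowers every other score at once.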

Comparing cross entropy loss and contrastive loss used in SimCLR

As shown in the figure above for contrastive learning, we first sample k+1 images from the dataset, Image 0 through Image k, for calculating the contrastive loss. Image 0 and Image 1 are similar images that are different from the rest, {Image 2 … Image k}. Then we use the encoder to obtain the representations {z0 … zk}, one for each image. We use a dot product as a measure of similarity between vectors. Minimizing the contrastive loss will increase the dot product between z0 and z1 and decrease the dot product between z0 and the rest of the representations. As a result, the representations of Image 0 and Image 1 (blue arrows) move closer to each other and further away from the rest of the representations (yellow arrows).
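The loss for a single anchor can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not SimCLR's exact loss: it uses raw dot products as described above, whereas the SimCLR paper normalizes the vectors and divides by a temperature. The function name and the toy vectors are hypothetical.

```python
import numpy as np

def contrastive_loss(z, anchor=0, positive=1):
    """Cross-entropy over dot-product similarities for one anchor.

    z is an array of shape (k + 1, d) holding representations z_0 ... z_k.
    """
    sims = z @ z[anchor]            # dot product of every z_i with the anchor
    sims = np.delete(sims, anchor)  # the anchor is not compared with itself
    # Index of the positive after the anchor's entry has been removed.
    target = positive if positive < anchor else positive - 1
    sims = sims - sims.max()        # for numerical stability
    log_probs = sims - np.log(np.exp(sims).sum())
    return -log_probs[target]

# z_0 and z_1 point the same way; z_2 and z_3 point elsewhere.
z_good = np.array([[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0], [0.0, -1.0]])
# Same vectors, but now z_1 is the one pointing away from z_0.
z_bad = np.array([[1.0, 0.0], [-1.0, 0.0], [1.0, 0.0], [0.0, -1.0]])

loss_good = contrastive_loss(z_good)
loss_bad = contrastive_loss(z_bad)
print(loss_good, loss_bad)
```

The loss is lower for `z_good`, where the similar pair is already aligned, than for `z_bad`, where a dissimilar image sits closer to the anchor than its positive does.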

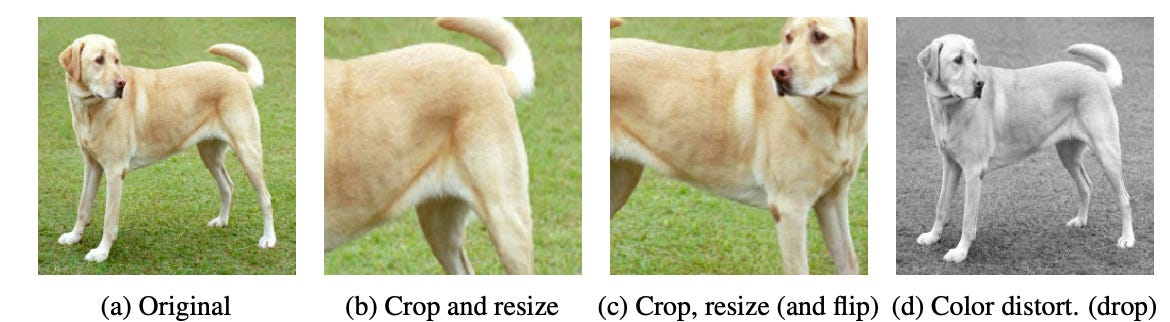

SimCLR: a simple framework for contrastive learning

As described in the last section, we need to know whether two images are similar to each other or not to calculate the contrastive loss. However, in the unsupervised representation learning setting, we do not have access to any labels. In SimCLR, this problem is addressed with data augmentation. It follows the simple reasoning that augmentations of the same image are similar to each other and augmentations of different images are not. This is a very different use of data augmentation from the usual one in computer vision, where it serves to create more data and make the model more robust. We randomly sample two different augmentations and apply them separately to the same image to obtain two different versions. As shown in the figure above, we obtain (b) and (c) by augmenting (a) differently. Then (b) and (c) are regarded as similar images when we calculate the contrastive loss.

Data augmentation in SimCLR. These images are regarded as similar when calculating the contrastive loss
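As a rough sketch of how such a pair might be produced, the snippet below augments one image twice with independently sampled parameters. The specific augmentations (fixed-size crop, horizontal flip, brightness jitter), their ranges, and the function names are simplifying assumptions for illustration. SimCLR's actual pipeline is richer and includes, among others, crop-and-resize and color distortion.

```python
import numpy as np

def random_augment(image, rng, crop=24):
    """Apply a randomly sampled crop, horizontal flip, and brightness change.

    image is a float array of shape (H, W, 3) with values in [0, 1].
    """
    h, w, _ = image.shape
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    view = image[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        view = view[:, ::-1]                     # horizontal flip
    brightness = rng.uniform(0.6, 1.4)           # illustrative jitter range
    return np.clip(view * brightness, 0.0, 1.0)

def make_positive_pair(image, rng):
    """Two independently augmented views of one image, treated as similar."""
    return random_augment(image, rng), random_augment(image, rng)

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                  # stand-in for image (a)
view_b, view_c = make_positive_pair(image, rng)  # play the roles of (b) and (c)
print(view_b.shape, view_c.shape)
```

The two views differ pixel by pixel, yet both come from the same source image, which is the only "label" the method needs.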

SimCLR also has a simple training pipeline: in a sampled mini-batch of size N, each image is augmented twice with two different randomly sampled augmentation functions, giving 2N augmented images. We will refer to augmentations of the same image as “siblings” in this post. Then within the mini-batch, each augmented image is contrasted positively with its sibling and negatively with the rest of the augmented images, 2(N-1) in total. The contrastive loss is propagated backward to update the encoder.

SimCLR’s training pipeline

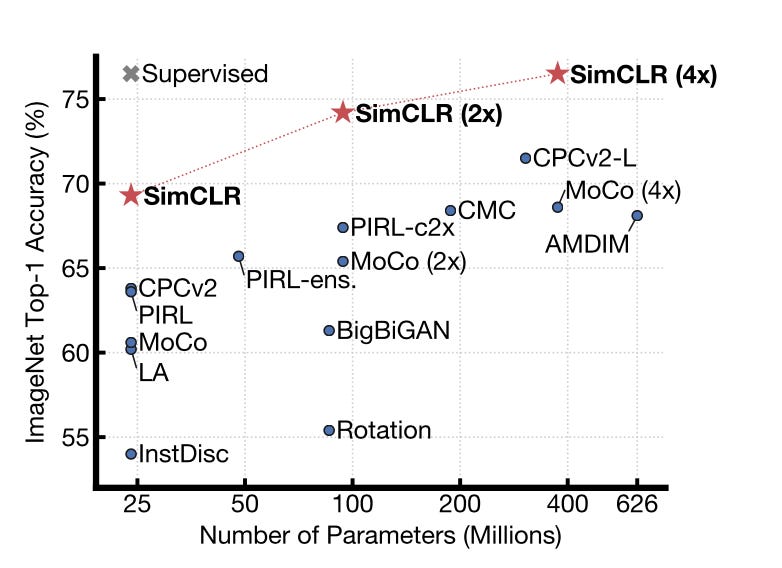
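The batch-level loss in this pipeline can be sketched as follows. This is a minimal NumPy version under a few assumptions: similarities are cosine similarities scaled by a temperature, as in the SimCLR paper, the temperature value 0.5 is only illustrative, and siblings are assumed to sit in adjacent rows (2i and 2i + 1), which is a layout chosen here for convenience. Only the forward computation is shown; in practice an autodiff framework would backpropagate through it.

```python
import numpy as np

def simclr_batch_loss(z, temperature=0.5):
    """Average contrastive loss over a batch of 2N augmented views.

    z has shape (2N, d); rows 2i and 2i + 1 are siblings (same source image).
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # cosine similarity
    sims = z @ z.T / temperature                      # (2N, 2N) similarities
    np.fill_diagonal(sims, -np.inf)                   # never contrast a view with itself
    sibling = np.arange(len(z)) ^ 1                   # 0<->1, 2<->3, ...
    # Row-wise log-softmax: each row has 1 positive and 2(N - 1) negatives.
    sims = sims - sims.max(axis=1, keepdims=True)
    log_probs = sims - np.log(np.exp(sims).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(z)), sibling].mean()

# N = 4 images, so 2N = 8 views and 2(N - 1) = 6 negatives per view.
# Siblings share a direction and all other views are orthogonal to it.
z_aligned = np.repeat(np.eye(4), 2, axis=0)
# Same 8 vectors, reordered so each view's twin is no longer its sibling.
z_misaligned = np.tile(np.eye(4), (2, 1))

loss_aligned = simclr_batch_loss(z_aligned)
loss_misaligned = simclr_batch_loss(z_misaligned)
print(loss_aligned, loss_misaligned)
```

The aligned batch yields the lower loss, since every view is closest to its own sibling. In the misaligned batch each view's nearest neighbor is counted as a negative, so the loss is higher.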

Empirically, SimCLR achieves a big performance gain compared to previous methods. Its performance is comparable to that of the standard supervised pre-training and fine-tuning pipeline. This suggests that the gap between unsupervised and supervised representation learning is largely closed in many vision tasks. Below is a plot showing the accuracy of a trained linear classifier that takes in learned representations and outputs class labels. SimCLR outperforms previous unsupervised methods like BigBiGAN and MoCo and achieves similar accuracy to the supervised methods where the model is trained end-to-end. As a refresher, for all unsupervised methods shown in this plot, the encoder is frozen and only the linear classifier is trained with labeled data.

ImageNet Top-1 accuracy of linear classifiers trained on representations learned with different self-supervised methods. Gray cross indicates supervised ResNet-50. (Image and caption from SimCLR paper)

Contrastive learning + Supervised learning

Contrastive learning achieves state-of-the-art performance in unsupervised representation learning, outperforming some of its supervised counterparts. It turns out we can also leverage the insights of contrastive learning in standard supervised learning settings, say in the training of an ImageNet classifier. As introduced in the paper “Supervised Contrastive Learning”, the supervised contrastive loss obtains state-of-the-art performance on ImageNet with a 78.8% accuracy, which is a 1% improvement compared to a standard classifier trained with cross-entropy loss. It also has a unique training pipeline that differs from the standard supervised learning setting.

Normally in supervised learning, we train a classifier end-to-end. That is, we input the image to the neural network, and calculate the cross-entropy loss with respect to the one-hot encoded vector of the label. However, in supervised contrastive learning, the training procedure is split into two stages. In the first stage, we learn an encoder that encodes the image into representations by optimizing the supervised contrastive loss. We then freeze the encoder and append an additional linear layer that takes in learned representations and outputs class labels. The second stage is trained in the same way as standard supervised learning.

Supervised contrastive loss leverages the fact that we have access to class labels when contrasting different images. Unlike in the unsupervised case, where we need data augmentation to obtain semantically similar images, we can now simply compare the images’ labels to decide whether their representations should be “pulled together” or “repelled further from each other”.
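A label-aware contrastive loss along these lines might look like the NumPy sketch below. It follows the general form described in the supervised contrastive paper (for each anchor, average the log-probability over all same-label samples), but the function name, the temperature value, and the toy vectors are assumptions made for illustration. It also assumes every label appears at least twice in the batch.

```python
import numpy as np

def supervised_contrastive_loss(z, labels, temperature=0.5):
    """Contrastive loss where every same-label sample counts as a positive.

    z has shape (B, d); labels has shape (B,). Assumes each label occurs at
    least twice in the batch so that every anchor has a positive.
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sims = z @ z.T / temperature
    np.fill_diagonal(sims, -np.inf)                 # exclude self-comparisons
    sims = sims - sims.max(axis=1, keepdims=True)
    log_probs = sims - np.log(np.exp(sims).sum(axis=1, keepdims=True))

    positives = labels[:, None] == labels[None, :]  # same class: pull together
    np.fill_diagonal(positives, False)
    # Average log-probability over each anchor's positives, then over anchors.
    per_anchor = np.where(positives, log_probs, 0.0).sum(axis=1) / positives.sum(axis=1)
    return -per_anchor.mean()

# Two "dog" vectors near one axis, two "cat" vectors near the other.
z = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
labels_matching = np.array([0, 0, 1, 1])   # labels agree with the geometry
labels_crossed = np.array([0, 1, 0, 1])    # labels disagree with the geometry

loss_matching = supervised_contrastive_loss(z, labels_matching)
loss_crossed = supervised_contrastive_loss(z, labels_crossed)
print(loss_matching, loss_crossed)
```

The loss is lower when same-label representations are already close together, so minimizing it pulls each class into its own cluster.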

Figure comparing supervised vs unsupervised contrastive learning, from supervised contrastive paper

This resolves a problem in the unsupervised setting, where we might treat different images from the same class as dissimilar and thus repel their representations. As illustrated in the figure above, on the left we have supervised contrastive. All images belonging to “Class 1”, in this case dogs, will be pulled together in the learned representation. At the same time they will be repelled from “Class 2”, in this case cats. On the right of the figure we have self-supervised contrastive*, which includes methods like SimCLR that we just discussed. In this case the “positive” class consists of augmentations of the same image, which will be pulled together. But we can no longer restrict the negatives to images from different classes, because we do not have labels. Thus any other image in the dataset can serve as a “negative”. This creates the problem that there might also be dogs among the negatives. Effectively, we are pushing one dog image away from another, which is not ideal for learning a representation that captures semantic meaning.

* self-supervised and unsupervised are synonyms. They both refer to training without human annotation.

Implications and Conclusion

Over the past few years, ImageNet pre-training has become a standard practice in computer vision. When data is scarce, it is beneficial to use supervised pre-trained networks to produce meaningful representations. Contrastive learning demonstrates that we can do just as well without any labeled data. Instead, the vast amount of unlabeled data can all be leveraged to train a powerful encoder by optimizing the contrastive loss. Humans are creating data faster than ever before. The ability to leverage such data without any slow and expensive human labeling is a big step forward.

Beyond computer vision, unsupervised representation learning has already been revolutionizing fields like Natural Language Processing, with models like BERT and GPT trained on unlabeled texts dominating almost all benchmarks. It will be exciting to see if the idea of contrastive learning can also be applied to domains outside of computer vision like voice recognition or processing high dimensional medical data.

References

SimCLR: https://arxiv.org/abs/2002.05709

Supervised contrastive: https://arxiv.org/abs/2004.11362